Is Your Market Ready for You? How Market Maturity Shapes Your B2B GTM Success

Is your GTM strategy aligned with your market’s maturity? Learn how to assess your market’s stage and execute a strategy that drives predictable...

Cris S. Cubero

Over the last two years, AI has moved from experimental curiosity to assumed baseline inside SaaS organizations. Founders and revenue leaders are now surrounded by narratives of companies scaling to eight figures with AI, teams replacing headcount through automation, and complex workflow diagrams presented as proof of operational sophistication. The message is implicit but clear: serious teams are moving fast, automating aggressively, and stacking tools. If you are not doing the same, you risk falling behind.

Yet when we look closely at how AI is actually playing out inside real SaaS teams, a different picture emerges: AI is being layered onto operating models that are already strained, misaligned, and inefficient. Rather than correcting the root issues, AI amplifies them. What many teams are experiencing today is not a failure of technology but the exposure of structural problems that manual effort previously masked.

This is the reckoning AI has introduced. It is not forcing teams to move faster. It is forcing them to confront how work is actually done.

A defining feature of the current market is the rise of what can best be described as a workflow arms race.

Conversations about AI adoption are dominated by tools, automations, and increasingly elaborate system diagrams. Entire strategies are framed around which platforms are connected, how many steps a workflow contains, or how little human involvement remains. The complexity itself becomes the signal.

What gets lost in this framing is whether any of that complexity meaningfully improves decision-making or customer outcomes. Revenue leaders feel pressure to participate in this arms race not because they believe every tool stack represents real leverage, but because the absence of visible AI activity now carries reputational risk. Doing nothing feels irresponsible. Doing something, even without clarity, feels safer.

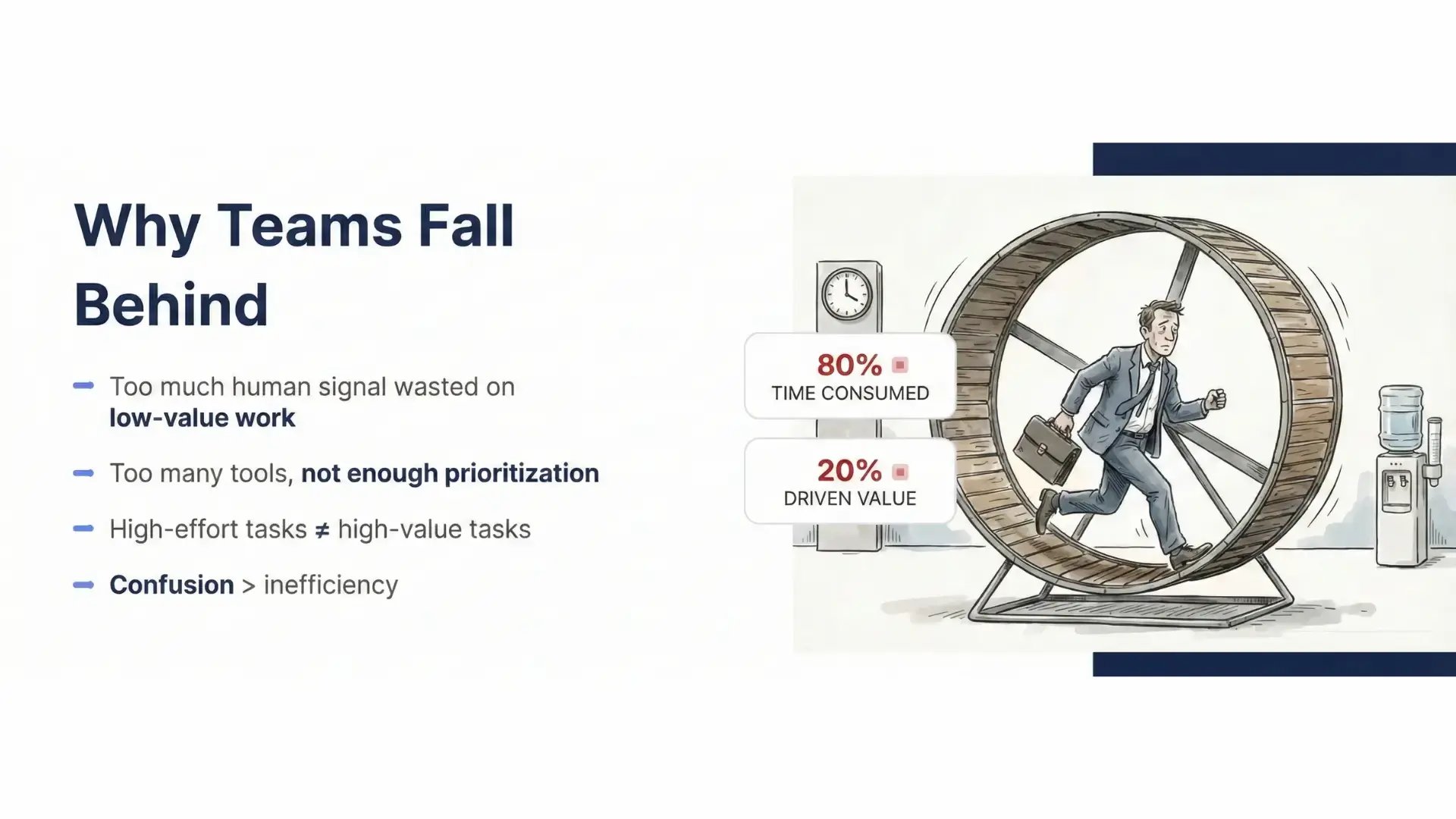

The result is a proliferation of automation before prioritization. Teams build systems without first agreeing on ownership, definitions, or success criteria. Reporting is automated before the data can be trusted, and processes are scaled before they are understood. From the outside, this looks like momentum, but internally, it often feels like noise.

AI adoption in this form is performative. It optimizes for visibility rather than value, and it substitutes motion for judgment.

One of the most persistent assumptions in AI adoption is that speed is inherently beneficial. Faster execution, faster output, faster iteration are treated as universal goods. AI reinforces this belief by dramatically compressing the time required to complete tasks that once took hours or days.

What this assumption ignores is that speed magnifies whatever system it is applied to. When the underlying model of work is sound, AI accelerates insight and reduces friction. When it is not, AI accelerates misalignment, error propagation, and rework. Teams end up moving quickly in the wrong direction, generating more output while accumulating more downstream cost.

This is why automating the wrong thing first is so damaging. When KPI reporting is automated before CRM data is reliable, the result is not clarity but false confidence. When content creation is scaled before ICPs and personas are clearly defined, the result is not reach but dilution. When speed is prioritized ahead of judgment, teams spend increasing amounts of time cleaning up after their own systems.
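To make the reporting example concrete, here is a minimal Python sketch of a data-quality gate that refuses to publish an automated pipeline number until the underlying CRM records are complete enough to trust. The `Opportunity` record, its required fields, and the 95% threshold are hypothetical choices for illustration, not a recommendation for any particular CRM.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Opportunity:
    # Hypothetical CRM record; field names are illustrative assumptions.
    name: str
    amount: Optional[float]
    stage: Optional[str]
    owner: Optional[str]


REQUIRED_FIELDS = ("amount", "stage", "owner")


def completeness(records: list[Opportunity]) -> float:
    """Share of records with every required field populated."""
    if not records:
        return 0.0
    complete = sum(
        all(getattr(r, field) is not None for field in REQUIRED_FIELDS)
        for r in records
    )
    return complete / len(records)


def pipeline_report(records: list[Opportunity], min_completeness: float = 0.95) -> dict:
    """Return total pipeline value only if the data passes the quality gate."""
    score = completeness(records)
    if score < min_completeness:
        # Refuse to publish a number that would create false confidence.
        return {"status": "blocked", "completeness": round(score, 2)}
    # Skip any remaining gaps allowed by the threshold instead of crashing on None.
    total = sum(r.amount for r in records if r.amount is not None)
    return {"status": "ok", "pipeline": total}


records = [
    Opportunity("Acme", 50000.0, "proposal", "dana"),
    Opportunity("Globex", None, "discovery", "li"),
]
print(pipeline_report(records))
```

With one of the two records missing its amount, the gate returns a blocked status with a completeness of 0.5 instead of a pipeline total. The design choice is to fail loudly: a blocked report prompts someone to fix the data, while a confidently wrong number does not.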

AI does not correct direction; it commits organizations to their current direction more forcefully, with less room for recovery.

Nowhere are these dynamics more visible than in content and go-to-market execution. AI has dramatically lowered the cost of production, making it possible to generate large volumes of content, messaging, and assets in a fraction of the time previously required. In response, many teams have equated productivity with throughput, filling newly freed capacity with more output rather than higher-quality work.

The result is a market saturated with content that is technically competent but strategically empty. Messaging converges, blogs sound interchangeable, and thought leadership loses its edge. What teams gain in volume, they lose in authority.

This is not a failure of creativity or effort but a consequence of misallocated judgment. Instead of using AI to remove low-value work and create space for high-signal thinking, teams often backfill that space with additional low-judgment tasks. AI becomes a mechanism for accelerating mediocrity rather than enabling differentiation.

In a market where everyone can produce “good enough” instantly, “good enough” stops being an advantage. Signal becomes the scarce resource, and most organizations are unintentionally eroding it.

One of the most counterintuitive effects of AI adoption is the renewed importance of fundamentals. Clean data, clear definitions, aligned incentives, and a shared understanding of value were always important, but gaps in them were often tolerated. Manual processes absorbed inconsistencies, and human judgment compensated for what was missing.

Automation removes that buffer. AI systems require inputs to be explicit, structured, and reliable. When they are not, automation does not resolve the ambiguity; it operationalizes it. Misalignment between sales and marketing over what constitutes a qualified lead becomes embedded in workflows. Inconsistent data definitions propagate through dashboards and reports. Decisions are made faster, but on weaker foundations.
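A small, hypothetical Python example of how an unresolved lead definition gets embedded in automation. The `Lead` fields and thresholds are invented for illustration. Each team's implicit rule, once automated, quietly produces its own version of the truth, whereas a single agreed `is_qualified` function gives every workflow the same definition.

```python
from dataclasses import dataclass

SENIOR_LEVELS = {"director", "vp", "c-level"}


@dataclass(frozen=True)
class Lead:
    # Illustrative fields only; not a real CRM schema.
    company_size: int
    engaged_with_pricing: bool
    job_level: str


# Marketing's implicit rule, as it might live inside one workflow.
def marketing_qualified(lead: Lead) -> bool:
    return lead.engaged_with_pricing


# Sales' implicit rule, as it might live inside another workflow.
def sales_qualified(lead: Lead) -> bool:
    return lead.company_size >= 200 and lead.job_level in SENIOR_LEVELS


# One explicit, agreed definition that every workflow imports instead.
def is_qualified(lead: Lead) -> bool:
    return (
        lead.engaged_with_pricing
        and lead.company_size >= 200
        and lead.job_level in SENIOR_LEVELS
    )


leads = [
    Lead(50, True, "manager"),
    Lead(500, False, "vp"),
    Lead(300, True, "director"),
]
print(sum(map(marketing_qualified, leads)), sum(map(sales_qualified, leads)))
print(sum(map(is_qualified, leads)))
```

Both implicit rules count two qualified leads in this sample, yet they agree on only one of them, which is exactly the kind of disagreement that hides behind matching dashboard totals. The agreed definition qualifies a single lead. The specific thresholds matter less than the fact that the definition lives in one place.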

This is why teams that skipped foundational work feel the impact of AI most acutely. The unglamorous work of data hygiene, ownership, and sequencing is no longer optional overhead. It has become a prerequisite for leverage.

AI has not reduced the importance of fundamentals. It has increased the cost of ignoring them.

The most consequential mistake we see is conceptual rather than technical. AI adoption is still widely treated as a technology rollout, when in reality it is a change management problem.

Organizations introduce tools and expect behavior to follow. They announce new capabilities without redefining priorities. Marketing teams add automation without explicitly deciding what work should stop. And when outcomes fail to materialize, they add more tools rather than address the underlying operating model.

But tools do not change behavior. Clarity does, and so do priorities and explicit decisions about where human judgment is required, where automation is appropriate, and where work should be eliminated entirely.

AI forces leaders to confront questions they could previously defer: what actually matters, who decides, and which activities no longer deserve attention. Treating AI as software implementation avoids those questions. Treating it as organizational change requires answering them.

A common frustration among revenue teams is that AI has not reduced workload. In many cases, it has increased it. This is not because AI fails to create efficiency, but because efficiency is immediately converted into higher expectations.

When leaders see tasks completed faster, the faster pace and higher output become the new baseline. Meanwhile, tool management introduces new overhead, review cycles expand, and exceptions accumulate. Without a mechanism to intentionally remove work, AI becomes additive rather than subtractive.

Teams end up running faster on a treadmill that never slows down. The problem is not a lack of capacity but a lack of structural permission to stop.
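A back-of-the-envelope Python sketch of the treadmill, using made-up numbers: AI cuts production time per deliverable, but each output now needs review, and the expected volume rises to match the new speed. The `weekly_hours` helper and every figure in it are hypothetical.

```python
def weekly_hours(tasks: int, hours_per_task: float, review_per_task: float = 0.0) -> float:
    """Total weekly hours for a recurring task, including any per-task review overhead."""
    return tasks * (hours_per_task + review_per_task)


# Manual baseline: 10 deliverables a week at 2 hours each.
before = weekly_hours(tasks=10, hours_per_task=2.0)

# AI cuts production to 30 minutes, but each output now needs 15 minutes of review.
same_output = weekly_hours(tasks=10, hours_per_task=0.5, review_per_task=0.25)

# Leaders treat the new speed as the new bar and expect three times the output.
new_baseline = weekly_hours(tasks=30, hours_per_task=0.5, review_per_task=0.25)

print(before, same_output, new_baseline)  # 20.0 7.5 22.5
```

Holding output flat would cut the workload from 20 to 7.5 hours; letting output become the new baseline pushes it to 22.5, more than before. The savings reach the team only if someone explicitly decides what the freed hours are not going to be spent on.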

As execution becomes easier to replicate, competitive advantage is moving away from volume and toward irreducible human judgment. Decisions around positioning, pricing, narrative, coaching, and contextual tradeoffs are increasingly where differentiation lives. These are precisely the areas that cannot be copied by adopting the same tools as competitors.

Yet in many organizations, this work is crowded out first. High-judgment tasks are deprioritized in favor of scalable output, even though they are the source of long-term advantage. AI makes this tradeoff more visible, but it does not resolve it automatically.

Winning SaaS teams use AI to remove work that does not require judgment and deliberately protect the work that does. They do not automate indiscriminately. They automate strategically.

Every effective AI strategy ultimately revolves around a single question: what work deserves human judgment, and what does not?

This question forces prioritization, exposes waste, and legitimizes stopping work that persists only by habit. It reframes AI from a productivity tool into a decision-making lens.

SaaS teams that avoid this question remain trapped in superficial adoption. Teams that answer it gain a durable framework for deciding what to automate, what to scale, and what to protect.
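The central question can be expressed as a simple decision rule. The Python sketch below is a hypothetical two-question lens written for illustration; it is not the Syntropy Matrix mentioned at the end of this article. It asks whether a piece of work creates value and whether it requires human judgment, then maps the answers to stop, protect, or automate. The task names are invented examples.

```python
from enum import Enum


class Decision(Enum):
    AUTOMATE = "automate"
    PROTECT = "protect"  # keep human judgment and shield it from being crowded out
    STOP = "stop"


def triage(requires_judgment: bool, creates_value: bool) -> Decision:
    """Hypothetical two-question lens; not the Syntropy Matrix."""
    if not creates_value:
        return Decision.STOP  # work that persists only by habit
    if requires_judgment:
        return Decision.PROTECT
    return Decision.AUTOMATE


# (requires_judgment, creates_value) for a few invented tasks.
tasks: dict[str, tuple[bool, bool]] = {
    "weekly pipeline report formatting": (False, True),
    "pricing and positioning decisions": (True, True),
    "status deck nobody reads": (False, False),
}
plan = {name: triage(*flags).value for name, flags in tasks.items()}
print(plan)
```

Checking value before judgment is deliberate: work that creates no value should stop even if it feels like it needs a human, which is how the question legitimizes stopping work that persists only by habit.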

AI has not created new problems for revenue teams; it has removed the ability to ignore existing ones. The organizations that will thrive are not those that move fastest or adopt the most tools, but those that make the clearest decisions about how work should be done.

The future of AI in revenue is not more automation. It is better judgment, deliberately applied.

How AI is breaking the revenue playbook is the problem Antoine and Yusuf have been working through with SaaS teams over the past two years.

In the session Future-Proof Your Revenue Team: A 2026 Planning Session for Founders & Revenue Leaders, they walk through how to translate these ideas into concrete decisions using the Syntropy Matrix. The framework is not about adding more AI tools; it is a way to systematically decide what to automate, what to scale, and what should stop entirely as teams plan for 2026.

[Image: the Syntropy Matrix]

The session is practical, opinionated, and grounded in real revenue team examples.

Watch the full training here: Future-Proof Your Revenue Team: A 2026 Planning Session for Founders & Revenue Leaders
